Xen Project Software Overview

Содержание

- Introduction to Xen Project Architecture

- Guest Types

- Toolstack and Managment APIs

- I/O Virtualization in Xen

The Xen Project hypervisor is an open-source type-1 or baremetal hypervisor, which makes it possible to run many instances of an operating system or indeed different operating systems in parallel on a single machine (or host). The Xen Project hypervisor is the only type-1 hypervisor that is available as open source. It is used as the basis for a number of different commercial and open source applications, such as: server virtualization, Infrastructure as a Service (IaaS), desktop virtualization, security applications, embedded and hardware appliances. The Xen Project hypervisor is powering the largest clouds in production today.

Here are some of the Xen Project hypervisor’s key features:

- Small footprint and interface (is around 1MB in size). Because it uses a microkernel design, with a small memory footprint and limited interface to the guest, it is more robust and secure than other hypervisors.

- Operating system agnostic: Most installations run with Linux as the main control stack (aka "domain 0"). But a number of other operating systems can be used instead, including NetBSD and OpenSolaris.

- Driver Isolation: The Xen Project hypervisor has the capability to allow the main device driver for a system to run inside of a virtual machine. If the driver crashes, or is compromised, the VM containing the driver can be rebooted and the driver restarted without affecting the rest of the system.

- Paravirtualization: Fully paravirtualized guests have been optimized to run as a virtual machine. This allows the guests to run much faster than with hardware extensions (HVM). Additionally, the hypervisor can run on hardware that doesn’t support virtualization extensions.

This page will explore the key aspects of the Xen Project architecture that a user needs to understsand in order to make the best choices.

Introduction to Xen Project Architecture

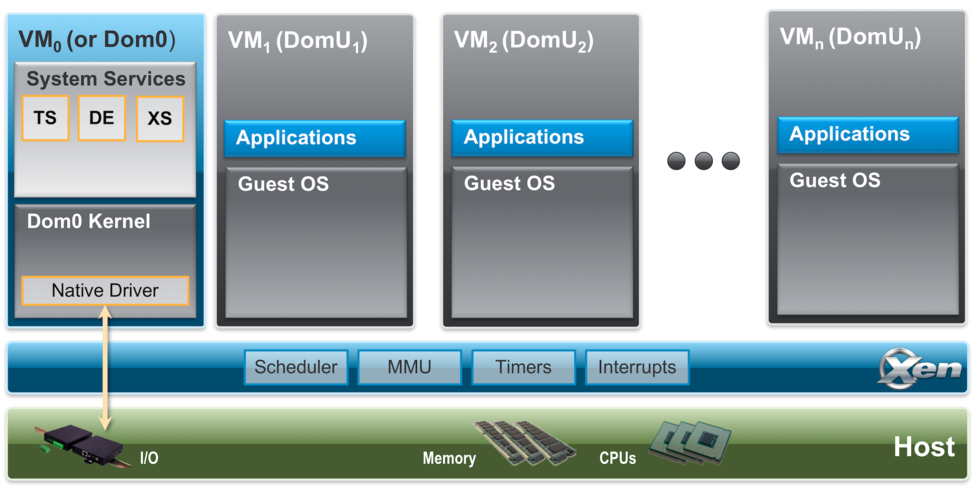

Below is a diagram of the Xen Project architecture. The Xen Project hypervisor runs directly on the hardware and is responsible for handling CPU, Memory, timers and interrupts. It is the first program running after exiting the bootloader. On top of the hypervisor run a number of virtual machines. A running instance of a virtual machine is called a domain or guest. A special domain, called domain 0 contains the drivers for all the devices in the system. Domain 0 also contains a control stack and other system services to manage a Xen based system. Note that through Dom0 Disaggregation it is possible to run some of these services and device drivers in a dedicated VM: this is however not the normal system set-up.

Components in detail:

- The Xen Project Hypervisor is an exceptionally lean (65KSLOC on Arm and 300KSLOC on x86) software layer that runs directly on the hardware and is responsible for managing CPU, memory, and interrupts. It is the first program running after the bootloader exits. The hypervisor itself has no knowledge of I/O functions such as networking and storage.

- Guest Domains/Virtual Machines are virtualized environments, each running their own operating system and applications. The hypervisor supports several different virtualization modes, which are described in more detail below. Guest VMs are totally isolated from the hardware: in other words, they have no privilege to access hardware or I/O functionality. Thus, they are also called unprivileged domain (or DomU).

- The Control Domain (or Domain 0) is a specialized Virtual Machine that has special privileges like the capability to access the hardware directly, handles all access to the system’s I/O functions and interacts with the other Virtual Machines. he Xen Project hypervisor is not usable without Domain 0, which is the first VM started by the system. In a standard set-up, Dom0 contains the following functions:

- System Services: such as XenStore/XenBus (XS) for managing settings, the Toolstack (TS) exposing a user interface to a Xen based system, Device Emulation (DE) which is based on QEMU in Xen based systems

- Native Device Drivers: Dom0 is the source of physical device drivers and thus native hardware support for a Xen system

- Virtual Device Drivers: Dom0 contains virtual device drivers (also called backends).

- Toolstack: allows a user to manage virtual machine creation, destruction, and configuration. The toolstack exposes an interface that is either driven by a command line console, by a graphical interface or by a cloud orchestration stack such as OpenStack or CloudStack. Note that several different toolstacks can be used with Xen

Guest Types

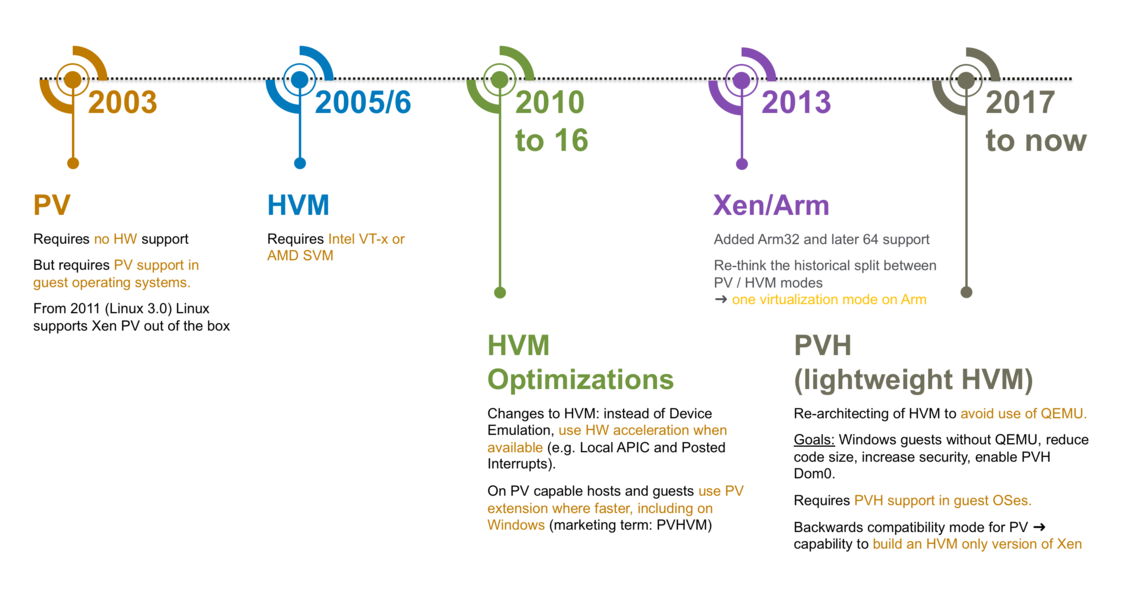

The following diagrams show how guest types have evolved for Xen.

On ARM hosts, there is only one guest type, while on x86 hosts the hypervisor supports the following three types of guests:

- Paravirtualized Guests or PV Guests: PV is a software virtualization technique originally introduced by the Xen Project and was later adopted by other virtualization platforms. PV does not require virtualization extensions from the host CPU, but requires Xen-aware guest operating systems. PV guests are primarily of use for legacy HW and legacy guest images and in special scenarios, e.g. special guest types, special workloads (e.g. Unikernels), running Xen within another hypervisor without using nested hardware virtualization support, as container host, …

- HVM Guests: HVM guests use virtualization extensions from the host CPU to virtualize guests. HVM requires Intel VT or AMD-V hardware extensions. The Xen Project software uses QEMU device models to emulate PC hardware, including BIOS, IDE disk controller, VGA graphic adapter, USB controller, network adapter, etc. HVM Guests use PV interfaces and drivers when they are available in the guest (which is usually the case on Linux and BSD guests). On Windows, drivers are available to download via our download page. When available, HVM will use Hardware and Software Acceleration, such as Local APIC, Posted Interrupts, Viridian (Hyper-V) enlightenments and make use of guest PV interfaces where they are faster. Typically HVM is the best performing option on for Linux, Windows, *BSDs.

- PVH Guests: PVH guests are lightweight HVM-like guests that use virtualization extensions from the host CPU to virtualize guests. Unlike HVM guests, PVH guests do not require QEMU to emulate devices, but use PV drivers for I/O and native operating system interfaces for virtualized timers, virtualized interrupt and boot. PVH guests require PVH enabled guest operating system. This approach is similar to how Xen virtualizes ARM guests, with the exception that ARM CPUs provide hardware support for virtualized timers and interrupts.

| IMPORTANT: Guest types are selected through builder configuration file option for Xen 4.9 or before and the type configuration file option from Xen 4.10 onwards in the (also see man pages). |

PV (x86)

Paravirtualization (PV) is a virtualization technique originally introduced by Xen Project, later adopted by other virtualization platforms. PV does not require virtualization extensions from the host CPU and is thus ideally suited to run on older Hardware. However, paravirtualized guests require a PV-enabled kernel and PV drivers, so the guests are aware of the hypervisor and can run efficiently without emulation or virtual emulated hardware. PV-enabled kernels exist for Linux, NetBSD and FreeBSD. Linux kernels have been PV-enabled from 2.6.24 using the Linux pvops framework. In practice this means that PV will work with most Linux distributions (with the exception of very old versions of distros).

HVM and its variants (x86)

Full Virtualization or Hardware-assisted virtualization (HVM) uses virtualization extensions from the host CPU to virtualize guests. HVM requires Intel VT or AMD-V hardware extensions. The Xen Project software uses Qemu to emulate PC hardware, including BIOS, IDE disk controller, VGA graphic adapter, USB controller, network adapter etc. Virtualization hardware extensions are used to boost performance of the emulation. Fully virtualized guests do not require any kernel support. This means that Windows operating systems can be used as a Xen Project HVM guest. For older host operating systems, fully virtualized guests are usually slower than paravirtualized guests, because of the required emulation.

To address this, the Xen Project community has upstreamed PV drivers and interfaces to Linux and other open source operating systems. On operating systems with Xen Support, these drivers and software interfaces will be automatically used when you select the HVM virtualization mode. On Windows this requires that appropriate PV drivers are installed. You can find more information at

HVM mode, even with PV drivers, has a number of things that are unnecessarily inefficient. One example are the interrupt controllers: HVM mode provides the guest kernel with emulated interrupt controllers (APICs and IOAPICs). Each instruction that interacts with the APIC requires a call into Xen and a software instruction decode; and each interrupt delivered requires several of these emulations. Many of the paravirtualized interfaces for interrupts, timers, and so on are available for guests running in HVM mode: when available in the guest — which is true in most modern versions of Linux, *BSD and Windows — HVM will use these interfaces. This includes Viridian (i.e. Hyper-V) enlightenments which ensure that Windows guests are aware they are virtualized, which speeds up Windows workloads running on Xen.

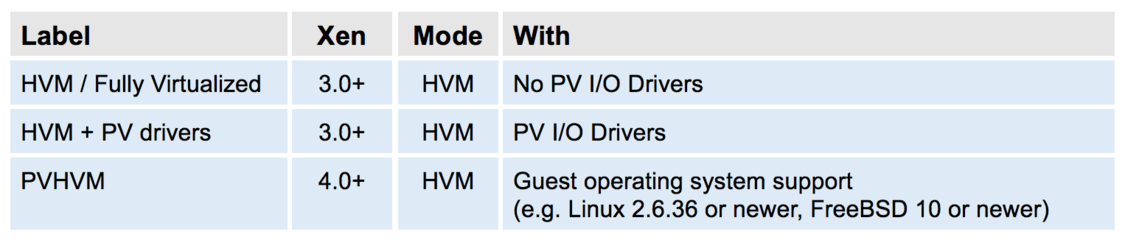

When HVM improvements were introduced, we used marketing labels to describe HVM improvements. This seemed like a good strategy at the time, but has since created confusion amongst users. For example, we talked about PVHVM guests to describe the capability of HVM guests to use PV interfaces, even though PVHVM guests are just HVM guests. The following table gives an overview of marketing terms that were used to describe stages in the evolution of HVM, which you will find occasionally on the Xen wiki and in other documentation:

Compared to PV based virtualization, HVM is generally faster.

PVH (x86)

A key motivation behind PVH is to combine the best of PV and HVM mode and to simplify the interface between operating systems with Xen Support and the Xen Hypervisor. To do this, we had two options: start with a PV guest and implement a "lightweight" HVM wrapper around it (as we have done for ARM) or start with a HVM guest and remove functionality that is not needed. The first option looked more promising based on our experience with the Xen ARM port, than the second. This is why we started developing an experimental virtualization mode called PVH (now called PVHv1) which was delivered in Xen Project 4.4 and 4.5. Unfortunately, the initial design did not simplify the operating system — hypervisor interface to the degree we hoped: thus, we started a project to evaluate a second option, which was significantly simpler. This led to PVHv2 (which in the early days was also called HVMLite). PVHv2 guests are lightweight HVM guests which use Hardware virtualization support for memory and privileged instructions, PV drivers for I/O and native operating system interfaces for everything else. PVHv2 also does not use QEMU for device emulation, but it can still be used for user-space backends (see PV I/O Support)..

PVHv1 has been replaced with PVHv2 in Xen 4.9, and has been made fully supported in Xen 4.10. PVH (v2) requires guests with Linux 4.11 or newer kernel.

-

(Xen 4.10+)

- Currently PVH only supports Direct Kernel Boot. EFI support is currently being developed.

ARM Hosts

On ARM hosts, there is only one virtualization mode, which does not use QEMU.

Summary

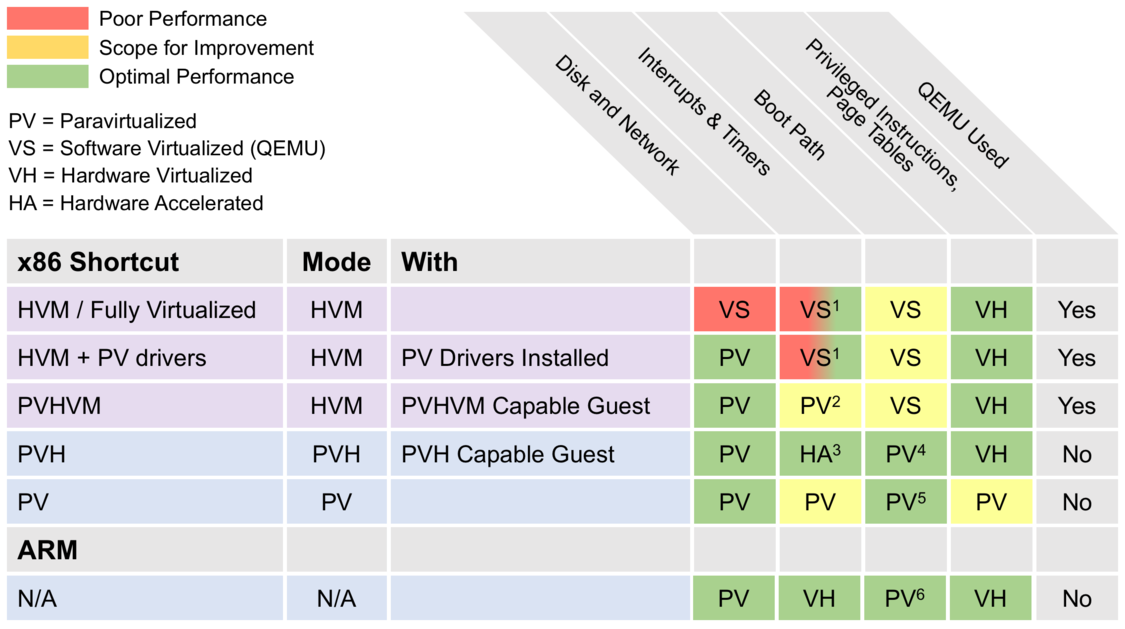

The following diagram gives an overview of the various virtualization modes implemented in Xen. It also shows what underlying virtualization technique is used for each virtualization mode.

- Uses QEMU on older hardware and hardware acceleration on newer hardware – see 3)

- Always uses Event Channels

- Implemented in software with hardware accelerator support from IO APIC and posted interrupts

- PVH uses Direct Kernel Boot or PyGrub. EFI support is currently being developed.

- PV uses PvGrub for boot

- ARM guests use EFI boot or Device Tree for embedded applications

From a users perspective, the virtualization mode primarily has the following effects:

- Performance and memory consumption will differ depending on virtualization mode

- A number of command line and config options will depend on the virtualisation mode

- The boot path and guest install on HVM and PV/PVH are different: the workflow of installing guest operating systems in HVM guests is identical to installing real hardware, whereas installing guest OSes in PV/PVH guests differs. Please refer to the boot/install section of this document

Toolstack and Managment APIs

Xen Project software employs a number of different toolstacks. Each toolstack exposes an API, against which a different set of tools or user-interface can be run. The figure below gives a very brief overview of the choices you have, which commercial products use which stack and examples of hosting vendors using specific APIs.

Boxes marked in blue are developed by the Xen Project

The Xen Project software can be run with the default toolstack, with Libvirt and with XAPI. The pairing of the Xen Project hypervisor and XAPI became known as XCP which has been superceded by open source XenServer and XCP-ng. The diagram above shows the various options: all of them have different trade-offs and are optimized for different use-cases. However in general, the more on the right of the picture you are, the more functionality will be on offer.

Which to Choose?

The article Choice of ToolStacks gives you an overview of the various options, with further links to tooling and stacks for a specific API exposed by that toolstack.

For the remainder of this document, we will however assume that you are using the default toolstack with it’s command line tool XL. These are described in the project’s Man Pages. xl has two main parts:

- The xl command line tool, which can be used to create, pause, and shutdown domains, to list current domains, enable or pin VCPUs, and attach or detach virtual block devices. It is normally run as root in Dom0

- Domain configuration files, which describe per domain/VM configurations and are stored in the Dom0 filesystem

I/O Virtualization in Xen

The Xen Project Hypervisor supports the following techniques for I/O Virtualization:

- The PV split driver model: in this model, a virtual front-end device driver talks to a virtual back-end device driver which in turn talks to the physical device via the (native) device driver. This enables multiple VMs to use the same Hardware resource while being able to re-use native Hardware support. In a standard Xen configuration, (native) device drivers and the virtual back-end device drivers reside in Dom0. Xen does allow running device drivers in so-called driver domains. PV based I/O virtualization is the primary I/O virtualization method for disk and network, but there are a host of PV drivers for DRM, Touchscreen, Audio, … that have been developed for non-server use of Xen. This model is independent of the virtualization mode used by Xen and merely depends on the presence of the relevant drivers. These are shipped with Linux and *BSD out-of-the box. For Windows drivers have to be downloaded and installed into the guest OS.

- Device Emulation Based I/O: HVM guests emulate hardware devices in software. In Xen, QEMU is used as Device Emulator. As the performance overhead is high, Device Based emulation is normally only used during system boot or installation and for low-bandwidth devices.

- Passthrough: allows you to give control of physical devices to guests. In other words, you can use PCI passthrough to assign a PCI device (NIC, disk controller, HBA, USB controller, firewire controller, sound card, etc) to a virtual machine guest, giving it full and direct access to the PCI device. Xen supports a number of flavours of PCI passthrough, including VT-d passthrough and SR-IOV. However note that using passthrough has security implications, which are well documented here.

PV I/O Support

The following two diagrams shows two variants of the PV split driver model as implemented in Xen:

Источник: